In powder-bed additive manufacturing (AM), the quality of the part begins with the quality of the powder. Methods for characterizing AM powder feedstock have typically relied on direct measurements of material properties such as particle size and aspect ratio distributions. However, a team of researchers at Carnegie Mellon University, led by professor of materials science and engineering Elizabeth Holm, has developed an automated method that is said to identify metal AM powders with 95 percent accuracy.

Using micrograph images of sample powders, Holm’s team has been able to teach a computer vision system to characterize material batches based on their qualitative, as well as quantitative, properties. A paper published in the Journal of the Minerals, Metals and Materials Society (JOM) details the system’s development and initial testing based on the characterization of eight metal powders.

The point of this classification exercise is not so much to show that the system can tell different powders apart as to show that it could enable comparison against an established baseline. The ability to check a new batch of powder against previous batches would let manufacturers qualify materials more quickly and monitor changes more easily. It may even be possible to associate a particular powder fingerprint with a certain behavior in the 3D printer and so better predict (and adjust for) future build outcomes.

Teaching the Machine

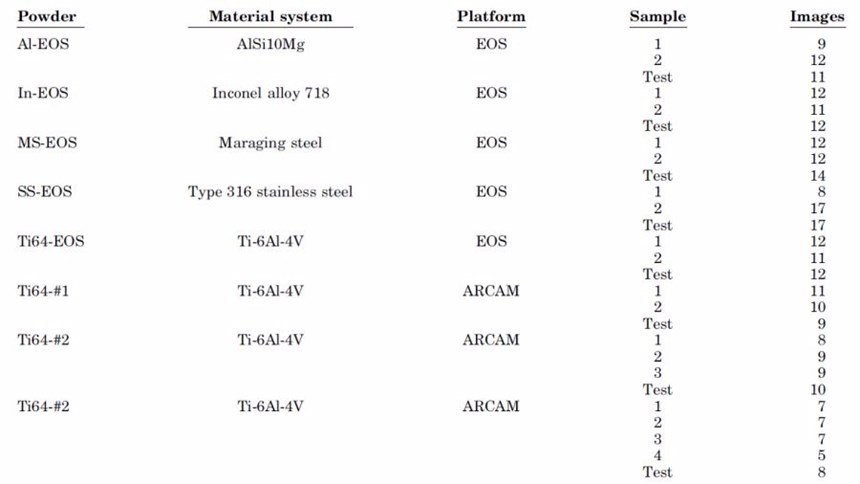

To develop the powder characterization system, the team worked with eight different gas-atomized metal powders, listed in Table 1. Five of these materials were supplied by EOS and intended for use in the company’s metal 3D printing systems: AL-EOS, In-EOS, MS-EOS, SS-EOS and Ti64-EOS. The other three samples were Ti-6Al-4V intended for use in Arcam systems; one of the batches was procured from Arcam while the other two were obtained from two different suppliers.

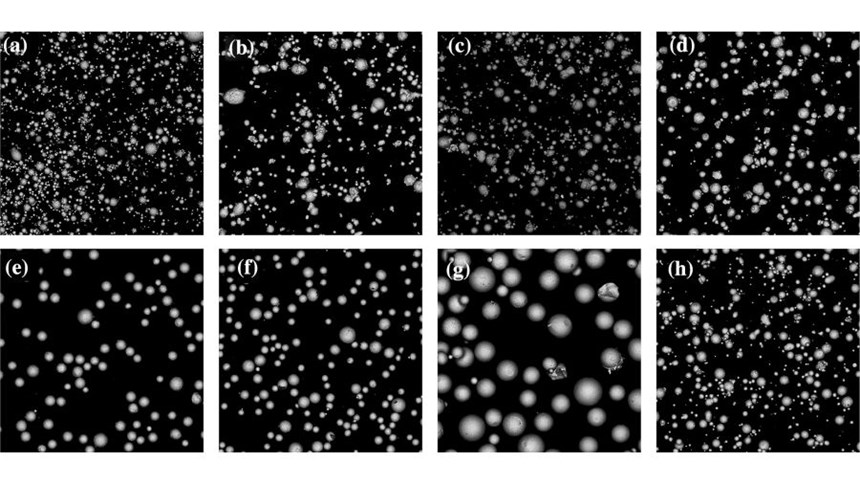

To gather samples of each powder, researchers shook each container (to prevent sample bias from powder settling) and then gathered a small amount of powder with a spatula. A thin layer of the powder was blown over double-sided carbon tape, and then the sample was cleaned with pressurized air to remove any loose particles. Researchers then took images of each sample using a microscope. A representative micrograph of each of the eight samples can be seen in Figure 2.

Next, the researchers adjusted the magnification of each micrograph so that the particles would appear similar in size across samples, and erased the image backgrounds so that the computer would focus only on the powder itself. Removing these confounding factors helps keep the system from being distracted by, or basing its decisions on, irrelevant information, Holm explains.
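The two preprocessing steps can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the team's code: it assumes grayscale micrographs in which particles appear brighter than the background, a median particle diameter already measured in pixels, and a hypothetical target diameter of 40 pixels. Nearest-neighbour resampling and a fixed intensity threshold stand in for whatever rescaling and background removal the researchers actually used.

```python
import numpy as np


def normalize_scale(image: np.ndarray, median_diameter_px: float,
                    target_diameter_px: float = 40.0) -> np.ndarray:
    """Resample so the median particle spans roughly target_diameter_px pixels.

    Assumption: target_diameter_px is a hypothetical choice, and plain
    nearest-neighbour resampling is used for simplicity.
    """
    scale = target_diameter_px / median_diameter_px
    h, w = image.shape
    new_h = max(1, int(round(h * scale)))
    new_w = max(1, int(round(w * scale)))
    # Map every output pixel back to the nearest source pixel.
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    return image[np.ix_(rows, cols)]


def mask_background(image: np.ndarray, threshold: float) -> np.ndarray:
    """Zero out pixels darker than threshold, assumed here to be background."""
    masked = image.copy()
    masked[image < threshold] = 0
    return masked
```

Doubling the scale of a 2 × 2 image, for example, yields a 4 × 4 image in which each source pixel is repeated in a 2 × 2 block. In practice the threshold would need tuning for each imaging setup.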

“The computer learns, but we don’t always know what the computer learns,” Holm says. “We want to try and prevent it from making distinctions based on the wrong data.”

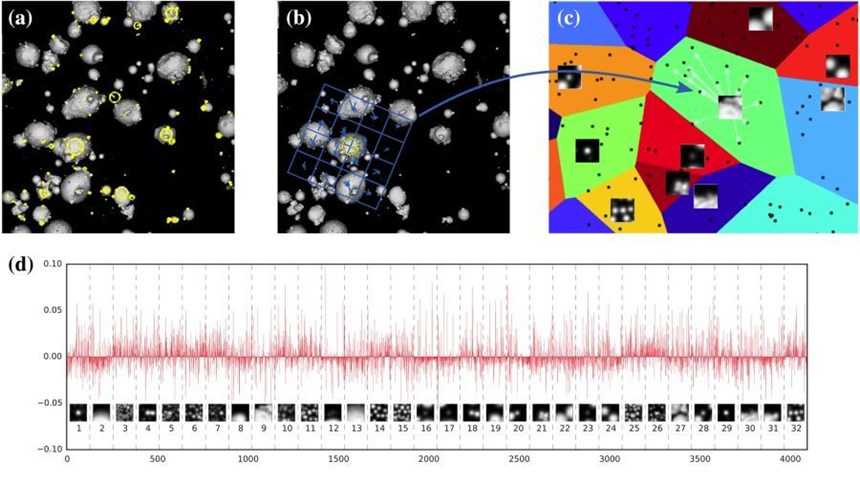

From these edited images, the vision system learned to characterize each sample in a three-part process (see Figure 3). First, the computer decides what to look at by identifying interest points, or visual features, in each image. Next, it numerically characterizes each of these features by associating it with the most similar “visual word,” a distinctive feature such as a spherical particle or a neck between particles. Thirty-two of these visual words were identified across the samples. Finally, the system groups the feature descriptors together to create a distinct “fingerprint” for each material.
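The visual-word step follows the general "bag of visual words" pattern, which can be sketched as below. This is an illustrative sketch, not the published pipeline. It assumes the interest-point descriptors have already been extracted (for instance by a detector such as SIFT, which is not shown) and uses plain k-means to learn the 32-word vocabulary. The fingerprint is then the normalized histogram of how often each visual word occurs in an image.

```python
import numpy as np

N_WORDS = 32  # vocabulary size reported in the article


def assign_words(descriptors: np.ndarray, vocabulary: np.ndarray) -> np.ndarray:
    """Index of the nearest visual word (Euclidean distance) for each descriptor."""
    d2 = ((descriptors[:, None, :] - vocabulary[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def build_vocabulary(descriptors: np.ndarray, k: int = N_WORDS,
                     iters: int = 20, seed: int = 0) -> np.ndarray:
    """Learn k visual words by plain k-means on pooled training descriptors.

    Assumption: k-means with random initialization stands in for whatever
    clustering the researchers actually used.
    """
    rng = np.random.default_rng(seed)
    start = rng.choice(len(descriptors), size=k, replace=False)
    centroids = descriptors[start].astype(float)
    for _ in range(iters):
        labels = assign_words(descriptors, centroids)
        for j in range(k):
            members = descriptors[labels == j]
            if len(members):  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return centroids


def fingerprint(descriptors: np.ndarray, vocabulary: np.ndarray) -> np.ndarray:
    """Normalized histogram of visual-word counts for one image."""
    words = assign_words(descriptors, vocabulary)
    counts = np.bincount(words, minlength=len(vocabulary)).astype(float)
    return counts / counts.sum()
```

With a two-word vocabulary at (0, 0) and (10, 10), the descriptors (1, 1), (9, 9) and (0, 1) map to words 0, 1 and 0, so the image's fingerprint is [2/3, 1/3].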

This fingerprint offers a more complete picture of the powder than could be gathered manually. Measuring particle size distribution is fairly easy, Holm explains, but until now there has been no good way to measure or capture more qualitative aspects of powders, such as surface roughness. These qualitative characteristics influence powder flow, spreadability and other factors that affect the final build.
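To show why a size distribution is the "easy" measurement, the usual summary statistics can be computed directly from a list of measured particle diameters. This sketch assumes number-weighted statistics over diameters in micrometres. Laser-diffraction reports are often volume-weighted instead, so the figures would differ. Two powders can share identical D10, D50 and D90 values while differing in roughness or satellite particles, which is the gap the fingerprint is meant to fill.

```python
import numpy as np


def psd_summary(diameters_um) -> dict:
    """Number-weighted D10, D50, D90 and span of particle diameters (micrometres).

    Assumption: counts are number-weighted; volume-weighted statistics
    would weight each particle by its diameter cubed.
    """
    d = np.asarray(diameters_um, dtype=float)
    d10, d50, d90 = np.percentile(d, [10, 50, 90])
    return {"D10": d10, "D50": d50, "D90": d90, "span": (d90 - d10) / d50}
```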

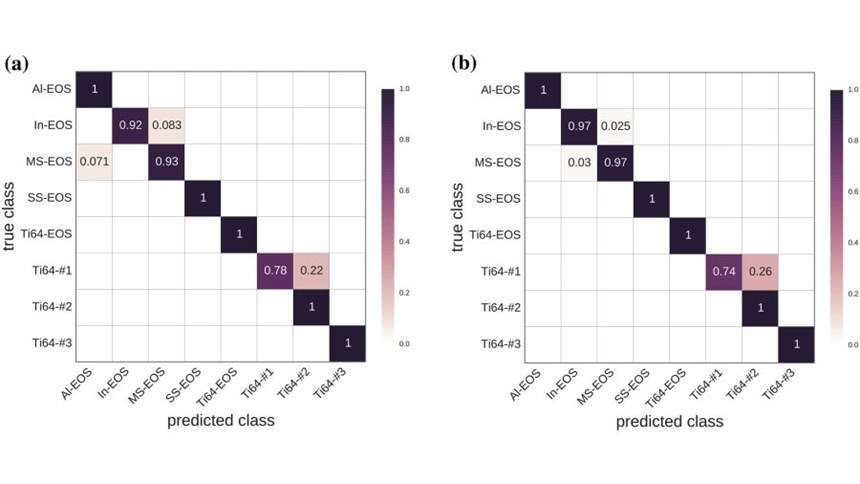

The team withheld one sample of each of the eight powders to be used for testing, and used the remaining samples as a training set for machine learning. When working with the training set, the vision system had a validation accuracy of 96.5 ±2.5 percent. As shown in Figure 4a, similarities between the MS-EOS and In-EOS powders and between the Ti64#1 and Ti64#2 powders caused the system to misclassify some samples.
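The confusion described here, with MS-EOS mistaken for In-EOS and Ti64#1 for Ti64#2, is what a confusion matrix records. Its diagonal counts correct classifications, and accuracy is the diagonal's share of the total. The sketch below is generic evaluation code, not the study's. It assumes that a "mean ± spread" figure such as 96.5 ± 2.5 percent summarizes accuracy across cross-validation folds, which the article does not state.

```python
import numpy as np


def confusion_matrix(true_labels, predicted_labels, classes) -> np.ndarray:
    """Rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    cm = np.zeros((len(classes), len(classes)), dtype=int)
    for t, p in zip(true_labels, predicted_labels):
        cm[index[t], index[p]] += 1
    return cm


def accuracy(cm: np.ndarray) -> float:
    """Fraction of samples on the diagonal (correctly classified)."""
    return float(np.trace(cm) / cm.sum())


def summarize_folds(fold_accuracies):
    """Mean and (population) standard deviation of per-fold accuracies.

    Assumption: this is one plausible reading of a 'mean +/- spread' figure.
    """
    a = np.asarray(fold_accuracies, dtype=float)
    return float(a.mean()), float(a.std())
```

For toy (not study) labels in which one of five MS-EOS/In-EOS/Ti64#1 images is misclassified, the off-diagonal entry marks the confused pair and accuracy comes out to 0.8.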

When tested on the reserved samples, the computer vision system had an overall accuracy of about 95 percent, comparable to the validation accuracy. Results are shown in Figure 4b. The same pairs of materials were confused in this test because of their similar particle morphologies and particle size distributions. Two other misclassifications were traced to outlier test images, pointing to a further need for statistically representative image sets.

The Human Factor

Though the vision system did make errors in testing, it far outperformed humans faced with the same task, says Holm. In informal tests in which people were asked to sort powder images, subjects averaged just over 50 percent accuracy. Humans are good at picking individual features out of pictures, but not at detecting subtle statistical differences, she explains. A single micrograph can also contain thousands of interest points that a human might consider significant but that would be time-consuming to analyze by hand. With machine vision, these features can be analyzed quickly and objectively, without relying on human judgment.

But Holm is quick to note that the purpose of her research is not to remove humans from the qualification process. Instead, this application of machine learning has the potential to relieve humans of repetitive work that doesn’t require their expertise.

“Our goal is not to eliminate the human; it’s to empower the human to do something that requires interesting intellectual activity,” Holm says. “Instead of an expert looking for an anomalous particle, we can let the computer do that. And then we can ask the expert, ‘What do we do about it?’ That’s a much more interesting question.”

In addition to easing the work of classifying materials, a comprehensive fingerprint of a batch of powder would enable humans to make smarter decisions and build better parts. Manufacturers could readily measure how closely a new batch of powder resembles previous batches, monitor changes in a recycled batch, and connect powder fingerprints to 3D printing results.
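Measuring how closely a new batch resembles previous ones amounts to computing a distance between fingerprints. The sketch below uses the chi-squared histogram distance, a common choice for comparing bag-of-words histograms. It is not necessarily what the researchers would use. The averaged baseline and the acceptance threshold are hypothetical, and a real threshold would have to be calibrated against builds known to be good.

```python
import numpy as np


def chi2_distance(h1, h2, eps: float = 1e-12) -> float:
    """Chi-squared distance between two normalized histograms (0 means identical)."""
    h1 = np.asarray(h1, dtype=float)
    h2 = np.asarray(h2, dtype=float)
    return float(0.5 * np.sum((h1 - h2) ** 2 / (h1 + h2 + eps)))


def matches_baseline(new_fp, baseline_fps, threshold: float):
    """Compare a new batch with the mean fingerprint of previously qualified batches.

    Assumption: averaging qualified fingerprints into one baseline and the
    threshold value are both hypothetical choices.
    """
    baseline = np.mean(np.asarray(baseline_fps, dtype=float), axis=0)
    d = chi2_distance(new_fp, baseline)
    return d, d <= threshold
```

For a recycled batch, the same distance could be logged after each reuse cycle so that drift from the virgin-powder baseline shows up as a rising trend.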

If a powder fingerprint differs from what is expected, it might be possible to change process parameters to compensate. Or, there might be ways of changing the powder itself (sieving to remove fines, for instance) to bring it into a qualified state. To make that happen, “What we need—desperately—is information that correlates the powder to outcomes,” Holm says.

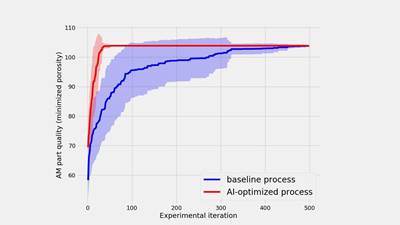

How far might machine learning go in additive manufacturing? According to Holm, the two are a natural pair.

“In additive manufacturing, we’re in a situation where, by the nature of the process itself, we are going to be given a lot of data,” she says. “What machine learning is really good at is taking data and making some sense of it—finding correlation and trends and directions.”

Machine learning will be key not only to raw material classification for additive manufacturing but also to the process control feedback loop, much in the way that autonomous vehicles learn from every drive they take. According to Holm, “That is our goal for additive as well: that the next build will be informed by all the builds before it.”

- Elizabeth Holm discusses three roles for computer vision in this video.

- More reporting on machine learning, artificial intelligence and additive manufacturing.
