Artificial intelligence (AI) has undeniable usefulness for the advance of additive manufacturing (AM). The variables affecting a laser powder bed fusion build — laser power, hatch distance, gas flow and more — are simply too numerous to test for every single part that a user may want to print. At a certain point, it makes sense to digitize, to simulate, and to outsource the “thinking” to a computer rather than commit human minds and physical resources to this process discovery.

But what is the right way to go about building an AI for additive manufacturing? Past efforts have taken approaches rooted in machine vision or supervised learning — that is, feeding a model annotated examples to teach it to recognize errors and abnormalities the same way that its human teacher would. As an AI strategy, this can be effective if time-consuming, but as a broadly applicable solution for advancing AM technology, it misses the forest for the trees.

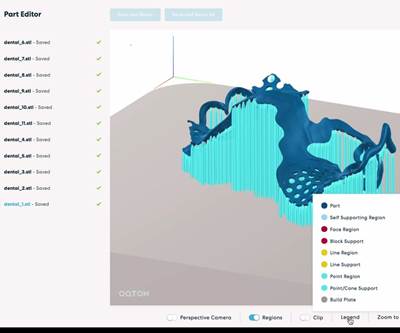

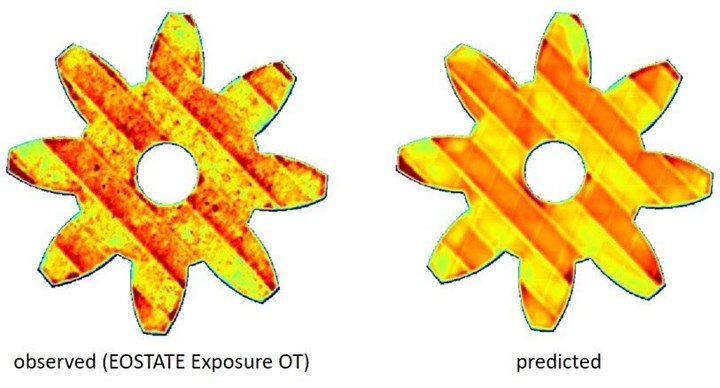

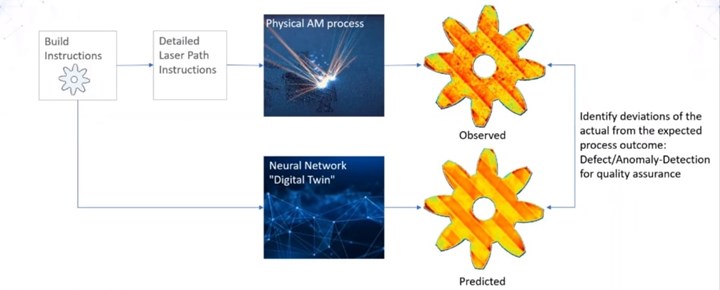

This amount of data granularity isn’t actually necessary, it turns out. Swiss company NNAISENSE has built a “deep digital twin” for direct metal laser sintering (DMLS) in collaboration with EOS, using a more holistic and far less labor-intensive approach. Instead of feeding the model individually labeled “trees” in the form of specific print parameters and pixel-level outputs, NNAISENSE feeds it something more like the “forest”: the optical tomography (OT) heat maps of each printed layer, correlated to process inputs such as part geometry, laser power and scan path. Unobservable effects such as gas flow don’t matter to the AI; what is important is how these factors interact to form a thermal footprint in each layer of the print — and that image provides enough information about both the system and the build to detect abnormalities, simulate results and even facilitate real-time process control.

Optical tomography (OT) images captured for every layer in a direct metal laser sintering (DMLS) build proved to be the right prediction target for NNAISENSE’s deep digital twin. Image: NNAISENSE

Building Cross-Industry AI Models

NNAISENSE is an offshoot of IDSIA, the Swiss AI Lab, where the company's five co-founders first met while performing postdoctoral research. As their work began to attract calls from industry, the group decided to commercialize the technology by starting a new company in 2014. Today NNAISENSE has headquarters in Lugano, Switzerland, and Austin, Texas, and employs about 30 people. It serves a wide range of industries and applications and has built AI models for everything from drone maintenance of wind turbines to optimization of glass manufacturing. Now, working with EOS, it is bringing deep digital twins based on neural networks to a new domain, laser powder bed fusion (LPBF).

“We can’t advance AI without contact with customers,” says Faustino Gomez, CEO of NNAISENSE. “You can leverage knowledge that you gained in one domain and use it to benefit another. Things that we learned from the glass manufacturing process we can bring into additive manufacturing. The machine learning is sort of agnostic to the domain.”

Regardless of the domain, building a deep digital twin requires data, and lots of it. That means working out with the customer what information is already available, what additional data can be captured, and which pieces are important to the process. Sometimes data are not easily accessible and must be harvested, and sometimes the information exists but has been labeled for use by humans, not machines. In the case of AM, however, EOS already had plenty of data available, captured through its EOState Monitoring Suite, which tracks everything from recoat errors to meltpool behavior during the build and keeps this information correlated with the intended build instructions.

Unlike supervised machine learning, where the model learns to recognize defects or other points of interest from structured data such as images annotated by humans, NNAISENSE uses self-supervised learning to create deep digital twin process models. In this methodology, the computer is presented with curated time-series inputs from sensors and control actions, and learns on its own to predict the future values of those sensors.
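To make the idea concrete, here is a minimal sketch of how unlabeled time series can supply their own training targets. The function name, the `history` parameter and the sample values are hypothetical and are not taken from NNAISENSE's system; the point is only that each target is the next recorded sensor value, so nobody has to annotate anything.

```python
def make_self_supervised_pairs(sensor_readings, control_actions, history=3):
    """Build (context, target) training pairs from unlabeled time series.

    The target of each pair is simply the next recorded sensor value,
    so no human annotation is involved.
    """
    if len(sensor_readings) != len(control_actions):
        raise ValueError("both series must have the same length")
    pairs = []
    for t in range(history, len(sensor_readings)):
        # Context: the last `history` (reading, action) steps before time t.
        context = list(zip(sensor_readings[t - history:t],
                           control_actions[t - history:t]))
        # Target: what the sensor actually recorded at time t.
        target = sensor_readings[t]
        pairs.append((context, target))
    return pairs


# Hypothetical sensor readings and control settings, for illustration only.
readings = [10.0, 11.0, 12.0, 13.0]
actions = [1, 1, 2, 2]
for context, target in make_self_supervised_pairs(readings, actions, history=2):
    print(context, "->", target)
```

A predictive model trained on pairs like these is scored against what the sensors really recorded, which is what separates this approach from learning on human-drawn labels.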

A self-supervised deep learning strategy is the right choice for additive manufacturing in particular because of the sheer quantity of data generated in an LPBF build; the diversity of the parts; and a lack of insight into which variables contribute to which results. This strategy avoids the chance of humans introducing contradictions into the system and results in a modeling strategy that is more flexible, easier to implement and more broadly applicable than standard supervised machine learning.

“3D printed parts can vary tremendously in terms of geometry,” Gomez says, putting the scope of the challenge into perspective. “To get conventional machine learning to work, you would have to have humans going through thousands of layers for thousands of different parts. Even then, how do you draw the line with a bounding box in terms of the severity of the defect? In many cases you don’t really know what’s going to ultimately result in a defect. It’s sufficiently complicated that totally optimizing it using conventional approaches is hopeless.”

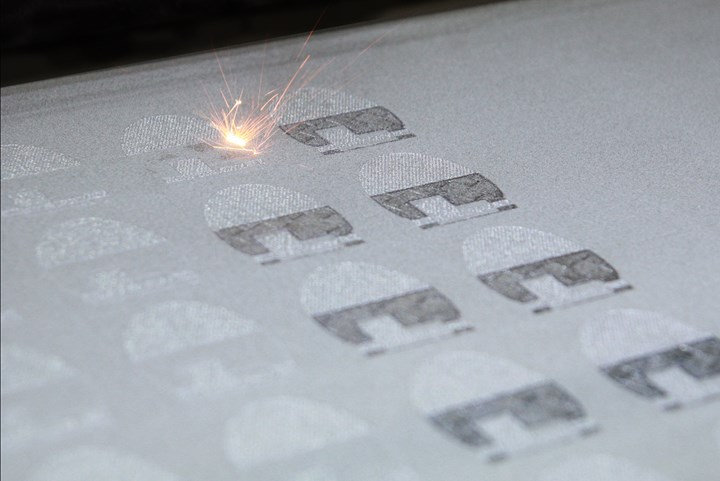

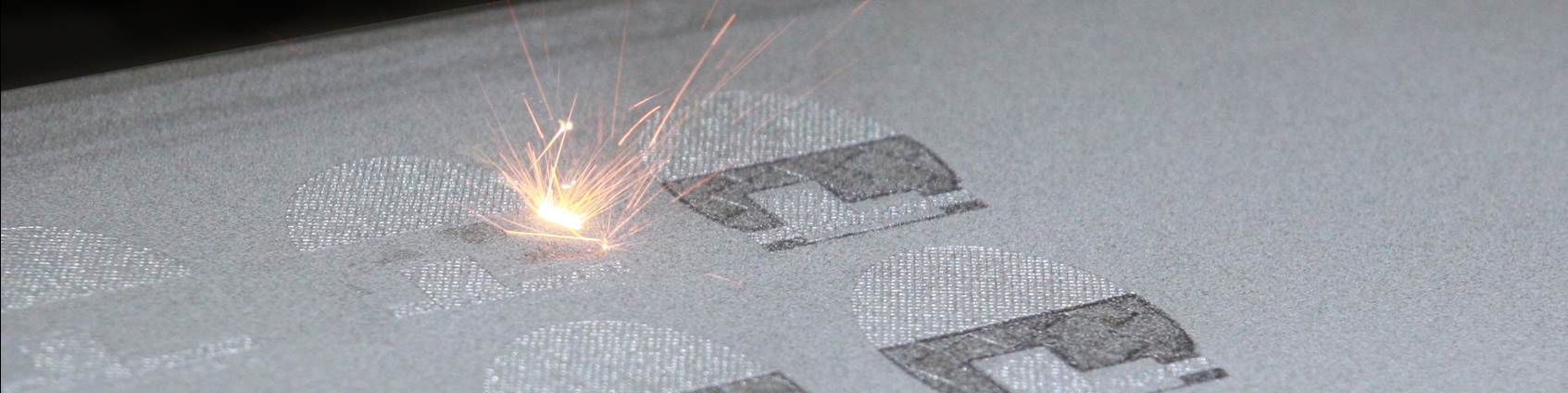

EOS direct metal laser sintering 3D printers (like these machines at Incodema3D) are widely used for everything from medical implants to aerospace parts. But DMLS could advance farther and more quickly with the assistance of self-supervised deep learning.

And while many factors can contribute to the success or failure of a laser powder bed fusion build, data do not necessarily exist for all of them. Smoke, for instance, can interfere with the operation of the laser but there is no existing sensor to detect this interference inside an EOS 3D printer. Rather than try to account for all of these effects in additive manufacturing, NNAISENSE focused its attention on the thermal images that EOState already captures of every layer with its Exposure OT camera, which reflect the conditions inside the printer as well as the original build instructions.

“What we said was, maybe it’s all in this data,” Gomez recounts. “Could we learn to predict what will happen on the next layer based solely on the geometry, the laser intensity and the laser path?”

Artificial Intelligence from Optical Tomography

The answer turned out to be yes. NNAISENSE was able to use build instructions from past EOS prints as inputs to train a model to predict the OT heat map of the next layer. This training was self-supervised: the model checked its layer predictions against the actual outcomes recorded in EOState, rather than relying on human intervention.
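The training loop described here can be sketched in a few lines. This is a toy under loud assumptions: a two-parameter linear model stands in for NNAISENSE's neural network, the "build instructions" are reduced to a hypothetical per-pixel laser energy map, and the heat values are invented. What it does share with the real approach is that the only training signal is the gap between the predicted layer and the recorded one.

```python
def predict_layer(weight, bias, energy_map):
    """Predict a heat map from a per-pixel laser energy map (toy linear model)."""
    return [[weight * e + bias for e in row] for row in energy_map]


def train_on_recorded_layers(layers, epochs=500, learning_rate=0.2):
    """Fit weight and bias by gradient descent on mean squared error.

    `layers` is a list of (energy_map, recorded_heat_map) tuples. The
    recorded heat map plays the role of the label, so no annotation is needed.
    """
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        count = 0
        for energy_map, recorded in layers:
            predicted = predict_layer(weight, bias, energy_map)
            for p_row, r_row, e_row in zip(predicted, recorded, energy_map):
                for p, r, e in zip(p_row, r_row, e_row):
                    error = p - r              # prediction vs. what was recorded
                    grad_w += 2.0 * error * e
                    grad_b += 2.0 * error
                    count += 1
        weight -= learning_rate * grad_w / count
        bias -= learning_rate * grad_b / count
    return weight, bias


# Invented data: recorded heat = 2.0 * energy + 0.5 for every pixel.
energy = [[0.0, 0.5], [1.0, 0.5]]
recorded_heat = [[0.5, 1.5], [2.5, 1.5]]
weight, bias = train_on_recorded_layers([(energy, recorded_heat)])
print(round(weight, 3), round(bias, 3))
```

A real OT image depends on geometry, scan path and history in ways no linear model captures, which is why a deep network is used in practice.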

The OT image works so well as a prediction target because direct metal laser sintering is a thermal process, and temperature affects both 3D printing and final part quality. Heat is necessary to melt and fuse the material, but heat distribution and buildup are the root cause of many issues in metal 3D printing. To achieve desired part properties consistently, the ideal condition would typically be homogeneous temperature distribution across the entire part, but in practice, parts develop hot and cold spots as a result of the laser scanning strategy, the gas flow and more. Thermal imaging captures the results of all these factors on each layer. It was therefore not necessary to teach the AI how the DMLS process works, only to provide a sufficient data set of build instructions along with their corresponding thermal images for it to learn from.

It was not necessary to teach the model how DMLS works or the physics of sintering metal. Instead, the AI learned the expected behavior for each successive printed layer by “studying” OT images from real builds. Photo Credit: EOS

“We did not inject any physics into the model,” Gomez says. “It was just able to learn internally the features it needed to, and how to weigh them to make the prediction.” From this training, the process model can now predict the heat distribution of each layer before it happens, and use this prediction to identify anomalies when they occur.

“Certain things are dictated by the build instructions, like the geometry and the tool paths,” Gomez says. The results derived from these instructions are predictable because they are systematic effects, things that are the result of the system itself and will be replicated if the process repeats. Any unpredictable effect outside of those instructions is a spurious deviation, he explains. Laser power and spot size are examples of factors that produce systematic, repeatable effects, while spurious deviations are unintentional occurrences such as spatters or smoke interfering with the laser. A model smart enough to know the difference can be useful in a number of ways, both on and off the machine.

The most obvious application for this AI is identifying defects in real time during the 3D printing build. “While you’re running the part, you can compare what actually happened to a probability map output by the model to see what is out of spec,” Gomez says. This information could be used for quality assurance down the road, or to stop the build entirely if a critical defect is identified.
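One simple way to picture that comparison is shown below. The article does not say what form the model's probability map takes, so this sketch assumes a per-pixel predicted mean and standard deviation and flags pixels whose observed value lies more than a chosen number of standard deviations from the prediction. The function name, the threshold and the numbers are all hypothetical.

```python
def find_out_of_spec_pixels(observed, predicted_mean, predicted_std, threshold=3.0):
    """Return (row, col) positions where the observed heat deviates strongly.

    A pixel is flagged when |observed - mean| / std exceeds `threshold`.
    """
    flagged = []
    rows = zip(observed, predicted_mean, predicted_std)
    for r, (obs_row, mean_row, std_row) in enumerate(rows):
        for c, (obs, mean, std) in enumerate(zip(obs_row, mean_row, std_row)):
            if std <= 0:
                raise ValueError("predicted standard deviation must be positive")
            z_score = abs(obs - mean) / std
            if z_score > threshold:
                flagged.append((r, c))
    return flagged


# Invented 2x2 layer: one pixel runs far hotter than the model expected.
observed = [[100.0, 101.0], [99.0, 140.0]]
expected = [[100.0, 100.0], [100.0, 100.0]]
spread = [[5.0, 5.0], [5.0, 5.0]]
print(find_out_of_spec_pixels(observed, expected, spread))
```

In this picture, systematic effects are absorbed into the predicted mean, so whatever gets flagged is a candidate spurious deviation such as spatter or smoke.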

The neural network functions as a digital twin to model expected behavior inside the printer. Comparing the predicted outcomes to the observed results makes it possible to identify any deviations. Image Credit: NNAISENSE

But stepping back farther in the additive manufacturing process, the model also makes it possible to evaluate the build instructions for a given job without ever having to make a part. The digital twin can function more generally as a simulation for certain aspects of the 3D printing process.

“You can basically use this model as a surrogate for the real thing,” Gomez says. “We can evaluate the intended build and say, the model is telling us this is going to produce a lot of hotspots or a lot of nonhomogeneous distribution of heat.” Coupled with other AI techniques such as deep reinforcement learning, the digital twin also makes it possible to “search through the space of possible build instructions to find the one that has the characteristics you want,” he explains. “Farther down the road, it could be involved in dealing with one of the thorniest problems in additive: machine calibration.”
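The surrogate idea can be illustrated with a deliberately simple search. Gomez mentions deep reinforcement learning; the sketch below swaps in plain exhaustive comparison over a handful of candidates, and `toy_surrogate` is an invented stand-in for the trained digital twin. The instruction fields (`laser_power`, `edge_factor`) are hypothetical as well.

```python
from statistics import pvariance


def heat_nonuniformity(heat_map):
    """Score a predicted heat map by the variance of its pixel values."""
    return pvariance([value for row in heat_map for value in row])


def pick_best_instructions(candidates, surrogate):
    """Return the candidate whose predicted heat map is most homogeneous."""
    return min(candidates, key=lambda c: heat_nonuniformity(surrogate(c)))


def toy_surrogate(instructions):
    """Invented stand-in for the digital twin: two pixels run hotter by a factor."""
    power = instructions["laser_power"]
    hot = power * instructions["edge_factor"]
    return [[power, hot], [hot, power]]


candidates = [
    {"laser_power": 200.0, "edge_factor": 1.30},
    {"laser_power": 200.0, "edge_factor": 1.05},
    {"laser_power": 250.0, "edge_factor": 1.20},
]
best = pick_best_instructions(candidates, toy_surrogate)
print(best)
```

No part is printed at any point in this loop. Each candidate costs one model evaluation, which is what makes searching a large space of build instructions feasible.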

The Promise of Self-Supervised Deep Learning for Additive Manufacturing

After several weeks of training on a GPU cluster using historic EOS build data, the model can now run on a single GPU and has been deployed by several EOS users in Europe. The AI model will enable these companies and future adopters to predict outcomes of new part designs with greater confidence and to select build instructions to achieve the goals of the application. Once a part is in production, any unanticipated effects in the actual build can be understood as spurious deviations, narrowing the field of possible causes and corrections. Process development will become faster and easier, and less reliant on legacy human knowledge that can be easily lost.

More broadly, though, the ability to create data-based models with minimal human supervision makes artificial intelligence much easier to apply effectively. Removing the need for human annotation means that an AI can be taught much more quickly and likely with less risk of encountering contradictions, which results in a more accurate and flexible architecture.

Though the model has only been trained in direct metal laser sintering of titanium, NNAISENSE is confident that it can be expanded to more build materials and machine types with additional data and training. The overall impact for additive manufacturing might be less time spent on trial and error, on troubleshooting and on process correction; reduced waste from failed builds and prototyping; and ultimately, a more reliable and broadly applicable manufacturing method. It may even enable one elusive advance for laser powder bed fusion: in-process control.

“If you can predict what’s going to happen in the next layer, you could even use that to control the laser in real time,” Gomez says. “This digital twin is the essential precursor to that control.”
